This article was featured in Zeomag

Phone rings…

Alice: Hey! Bob, what’s up?

Bob: Nothing much, just trying to set up the environment to run your application.

Alice: Oh! You have been at that for quite some time. Is it that difficult?

Bob: Yeah! It looks so intimidating. I mean, the number of dependencies it requires on my operating system is huge. I am struggling a lot.

Today we are witnessing a paradigm shift in the way we write software. We are no longer constrained by geography or platform, and people connect and share their ideas seamlessly.

This shift is largely propelled by consumers and development teams who believe in the ideology of “less is more”.

As a result, the workspace is becoming more diverse day by day, not only in terms of technical talent but also in terms of the technology stack.

In the conversation we just overheard between Alice and Bob, we can identify two major problems that arise when cross-platform integration takes place.

First, the development, testing and production environments can differ from one another. In that case, we have to tune the environment at all three stages to run our application. This calls for a huge amount of undesirable work that can delay the development cycle of the product.

Second, each application has a certain set of dependencies that are essential for it to function. Installing those dependencies therefore becomes a prerequisite for running the application.

Hence it becomes essential to devise techniques for shipping code, together with all of its dependencies, in a standalone bundle that can be tested and run in varied environments.

Let’s see how Docker provides an answer to this.

Container contains content:

Before we start with Docker, let’s first understand what makes it so useful. What is all this hype about containers?

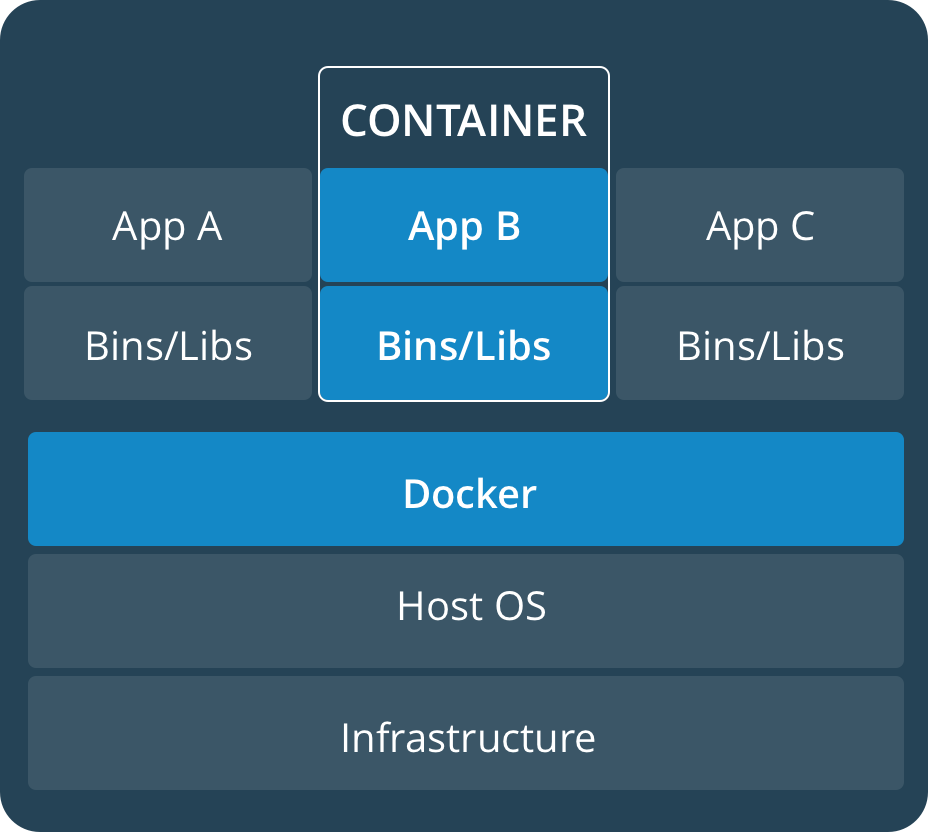

How containers fit in

According to Docker:

“A container image is a lightweight, stand-alone, executable package of a piece of software that includes everything needed to run it: code, runtime, system tools, system libraries, settings. Available for both Linux and Windows based apps, containerized software will always run the same, regardless of the environment. Containers isolate software from its surroundings, for example differences between development and staging environments and help reduce conflicts between teams running different software on the same infrastructure.”

We can therefore visualise containers as small, self-sufficient code bundles that run on top of the host OS and reproduce the same environment each time they are run.

Docker allows you to package an application with all of its dependencies into a standardized unit for software development, known as a container.

Now that we have an idea of what Docker is and how containers make our life easier, let’s get our hands dirty and dive deeper into some terms related to Docker.

You will encapsulate all the code, runtime packages and libraries your application needs in a single, lightweight, standalone, executable bundle known as an image.

When you load this image into memory and obtain a running instance of it, you have a container. By default, a container is isolated from the host environment (though it can be made to interact with it). This is where your application runs.

Dockerizing a simple Python Script:

Now let’s see how to dockerize a simple Python script along with all of its dependencies.

Problem Statement

You are given a list of 10000 integers and you need to compute the average.

Assembling the application:

Before we think about how to dockerize the application, we first need to assemble it locally. So we make a folder, dockPyApp, which contains our dataset and the Python script (pycode.py) that computes the average.
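As a hedged sketch, pycode.py could look like the following. It assumes the dataset is a plain-text file named data.txt (a hypothetical name) holding whitespace-separated integers; the helper names are illustrative too.

```python
# pycode.py: a minimal sketch of the averaging script.
# Assumption: the dataset is a text file named "data.txt" (hypothetical
# name) containing whitespace-separated integers.
import sys
from pathlib import Path

DATA_FILE = "data.txt"  # hypothetical dataset name


def parse_integers(text: str) -> list[int]:
    """Turn whitespace-separated tokens into a list of ints."""
    return [int(token) for token in text.split()]


def average(numbers: list[int]) -> float:
    """Arithmetic mean; an empty list has no average."""
    if not numbers:
        raise ValueError("cannot average an empty list")
    return sum(numbers) / len(numbers)


def main(path: str = DATA_FILE) -> None:
    numbers = parse_integers(Path(path).read_text())
    print(f"Average of {len(numbers)} integers: {average(numbers)}")


if __name__ == "__main__":
    if Path(DATA_FILE).is_file():
        main()
    else:
        # Report the missing dataset instead of crashing with a traceback.
        print(f"{DATA_FILE} not found in the current directory", file=sys.stderr)
```

Keeping parsing and averaging in separate functions makes both easy to test without touching the file system.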

Now we have the recipe ready to calculate the average of the integers present in the file.

Dockerfile:

We now need to pack our whole local assembly into a single executable package. To do this, we write a Makefile-like file that tells Docker how to build the image and which libraries and runtime packages to include. This file is called Dockerfile (by default, docker build looks for a file with exactly this name, so don’t save it under any other name or with an extension).

Let’s have a look at what we have inside our Dockerfile:
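A minimal Dockerfile for this folder might look like the text below. The base image python:3, the /app working directory and the data.txt file name are assumptions, not necessarily the article’s exact choices. To keep every example in Python, the Dockerfile is held in a string and written out by a small hypothetical helper, write_dockerfile.

```python
from pathlib import Path

# Assumed contents: python:3 base image, /app workdir, hypothetical data.txt.
DOCKERFILE = """\
# Start from an official Python base image (assumed tag: python:3).
FROM python:3

# Work inside /app in the image's filesystem.
WORKDIR /app

# Copy the script and the dataset into the image.
COPY pycode.py data.txt ./

# Run the averaging script whenever a container starts.
CMD ["python", "pycode.py"]
"""


def write_dockerfile(app_dir: str) -> Path:
    """Write DOCKERFILE into app_dir under the exact name 'Dockerfile'."""
    target = Path(app_dir) / "Dockerfile"
    target.write_text(DOCKERFILE)
    return target
```

Calling write_dockerfile("dockPyApp") would place the file next to pycode.py and the dataset, where docker build expects it.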

So now our local directory contains three files: the Dockerfile, pycode.py and the dataset.
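Before building, a quick check that the folder really holds all three files can save a confusing build error. This is a hypothetical helper that assumes the dataset is named data.txt.

```python
from pathlib import Path

# Hypothetical expected layout; the dataset name is an assumption.
EXPECTED_FILES = ("Dockerfile", "pycode.py", "data.txt")


def missing_files(app_dir: str, expected: tuple[str, ...] = EXPECTED_FILES) -> list[str]:
    """Return the expected file names that are not present in app_dir."""
    folder = Path(app_dir)
    return [name for name in expected if not (folder / name).is_file()]
```

An empty list from missing_files("dockPyApp") means the folder is ready to build.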

Notice how we are encapsulating all of our files (dependencies) inside a single standalone, executable package. This is exactly what we set out to do.

Building the image:

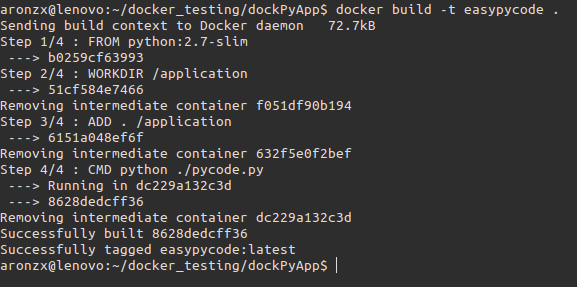

Now we are ready to build the image. Navigate to the source directory of the application and run a few Docker commands. In my case, I am using Ubuntu 14.04 (LTS) and the folder containing the files is dockPyApp. Before running the commands, make sure you have the latest version of Docker installed on your machine.

On your terminal, run `docker build -t easypycode .` (don’t forget the ‘.’).

Here we instruct Docker to find the Dockerfile in the current directory and build an image from it, and the -t option gives our image the name easypycode. The build command then executes the instructions in our Dockerfile one step at a time.

We can then check the image using `docker images`.

This lists all the images present on the system.
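If you prefer scripting these steps, they can be driven from Python with subprocess. This sketch only wraps `docker build -t easypycode .` and `docker images`; the helper names are hypothetical.

```python
import subprocess
from typing import Optional


def build_command(tag: str = "easypycode", context: str = ".") -> list[str]:
    """Argument list for `docker build -t <tag> <context>`."""
    return ["docker", "build", "-t", tag, context]


def images_command(repository: Optional[str] = None) -> list[str]:
    """Argument list for `docker images`, optionally limited to one repository."""
    cmd = ["docker", "images"]
    if repository:
        cmd.append(repository)
    return cmd


def run_docker(cmd: list[str]) -> None:
    """Execute a Docker CLI command; check=True raises CalledProcessError on failure."""
    subprocess.run(cmd, check=True)
```

From inside dockPyApp, run_docker(build_command()) followed by run_docker(images_command("easypycode")) mirrors the two terminal commands. Passing arguments as a list avoids shell quoting issues.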

Firing up the container:

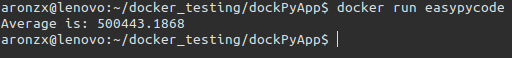

After building the image, we need to execute it and obtain a running instance (container) in memory. So we execute the run command, `docker run easypycode`.

This executes our Python script, and a successful run prints the computed average to the terminal.
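To capture the script’s output programmatically (say, in a test), the run step can be wrapped with subprocess. A sketch with hypothetical helper names; the --rm option, which deletes the container once it exits, is an addition and not part of the command above.

```python
import subprocess


def run_command(image: str = "easypycode", remove: bool = False) -> list[str]:
    """Argument list for `docker run [--rm] <image>`."""
    cmd = ["docker", "run"]
    if remove:
        cmd.append("--rm")  # clean up the stopped container automatically
    cmd.append(image)
    return cmd


def run_and_capture(image: str = "easypycode") -> str:
    """Run the container and return whatever the script printed to stdout."""
    result = subprocess.run(
        run_command(image, remove=True),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```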

Now that we have a working image, it is time to show off the portability Docker offers and tackle our problem of a single application running on diverse platforms.

So let’s upload our image to the Docker public registry so that anyone can access our application without platform constraints.

Uploading the image:

Before we proceed further, create a Docker account on Docker Hub.

After that, we need to log in to the public registry from our local machine with `docker login`.
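In scripts, docker login can read the password from standard input instead of prompting for it, which keeps it out of the command line. A sketch assuming the password sits in an environment variable named DOCKER_PASSWORD (a hypothetical name):

```python
import os
import subprocess


def login_command(username: str) -> list[str]:
    """`docker login` that reads the password from stdin rather than argv."""
    return ["docker", "login", "--username", username, "--password-stdin"]


def docker_login(username: str, password_env: str = "DOCKER_PASSWORD") -> None:
    """Log in using a password stored in an environment variable (hypothetical name)."""
    password = os.environ[password_env]
    subprocess.run(login_command(username), input=password, text=True, check=True)
```

Plain `docker login` at an interactive terminal remains the simplest option; this variant only matters for automation.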

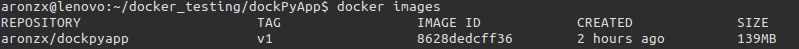

Now you need to associate the local image with a repository on the registry, using `docker tag easypycode <username>/<repository>:<tag>`. The tag acts like a version number for our image.
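The repository reference has the shape username/repository:tag. The sketch below assembles it with a simplified sanity check (lowercase repository path; tags limited to letters, digits, underscores, periods and hyphens, not starting with a period or hyphen, at most 128 characters). It is not Docker’s full reference grammar, and names like alice and v1 are purely illustrative.

```python
import re

# Simplified tag rule: first char letter/digit/underscore, then up to
# 127 more letters, digits, underscores, periods or hyphens.
TAG_PATTERN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def remote_reference(username: str, repository: str, tag: str) -> str:
    """Build '<username>/<repository>:<tag>' after basic sanity checks."""
    name = f"{username}/{repository}"
    if name != name.lower():
        raise ValueError("repository names must be lowercase")
    if not TAG_PATTERN.fullmatch(tag):
        raise ValueError(f"invalid tag: {tag!r}")
    return f"{name}:{tag}"


def tag_command(local_image: str, reference: str) -> list[str]:
    """Argument list for `docker tag <local_image> <reference>`."""
    return ["docker", "tag", local_image, reference]
```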

We can quickly check the tagged image locally with `docker images`.

Finally, we publish our image to the registry with `docker push <username>/<repository>:<tag>` so that people can access it.
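The push step as a Python sketch. It assumes you have already logged in and tagged the image; the reference alice/easypycode:v1 is hypothetical.

```python
import subprocess


def push_command(reference: str) -> list[str]:
    """Argument list for `docker push <username>/<repository>:<tag>`."""
    return ["docker", "push", reference]


def push_image(reference: str) -> None:
    """Upload the tagged image; requires a prior `docker login` and `docker tag`."""
    subprocess.run(push_command(reference), check=True)
```

For example, push_image("alice/easypycode:v1") uploads the image under that hypothetical account.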

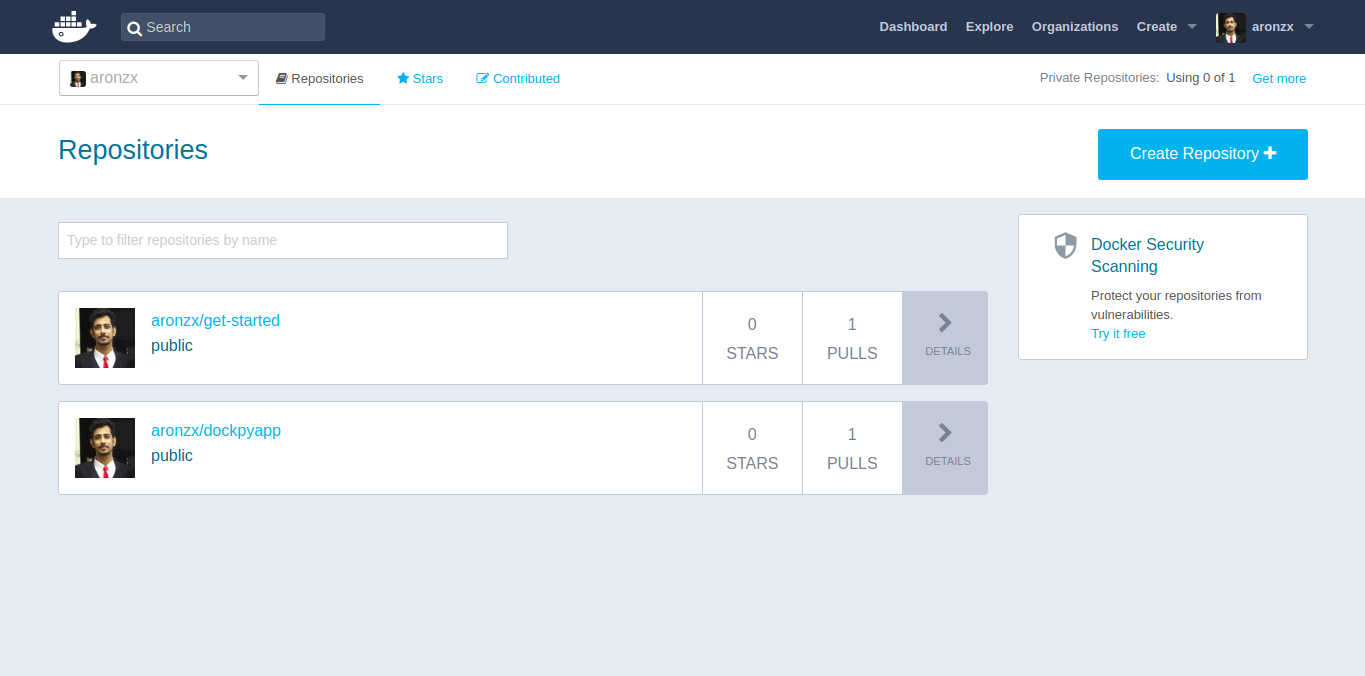

So now, when you log in to Docker Hub, you can see your repository listed on the public registry.

Now people can easily run your application without worrying about dependencies or platform suitability. To run the image, just use the run command with the repository name; if the image is not present locally, Docker pulls it from the registry.
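On a fresh machine, using the published image boils down to a pull and a run. The explicit docker pull is optional, since docker run fetches a missing image on its own. A sketch with a hypothetical reference and helper names:

```python
import subprocess


def fresh_machine_commands(reference: str) -> list[list[str]]:
    """Commands a new user needs: an (optional) explicit pull, then a run."""
    return [
        ["docker", "pull", reference],
        ["docker", "run", "--rm", reference],
    ]


def run_published(reference: str) -> None:
    """Execute each command in order, stopping at the first failure."""
    for cmd in fresh_machine_commands(reference):
        subprocess.run(cmd, check=True)
```

Nothing besides Docker itself needs to be installed on that machine; Python and the dataset travel inside the image.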

Congratulations! You have successfully dockerized and uploaded your first Python application. You are ready to conquer the world!

Get.Set.Dock!